
First, I would like to express my condolences to the families of MH370. No matter what conclusion is drawn from these videos, they all want closure, and we should be mindful of these posts and how they can affect others.

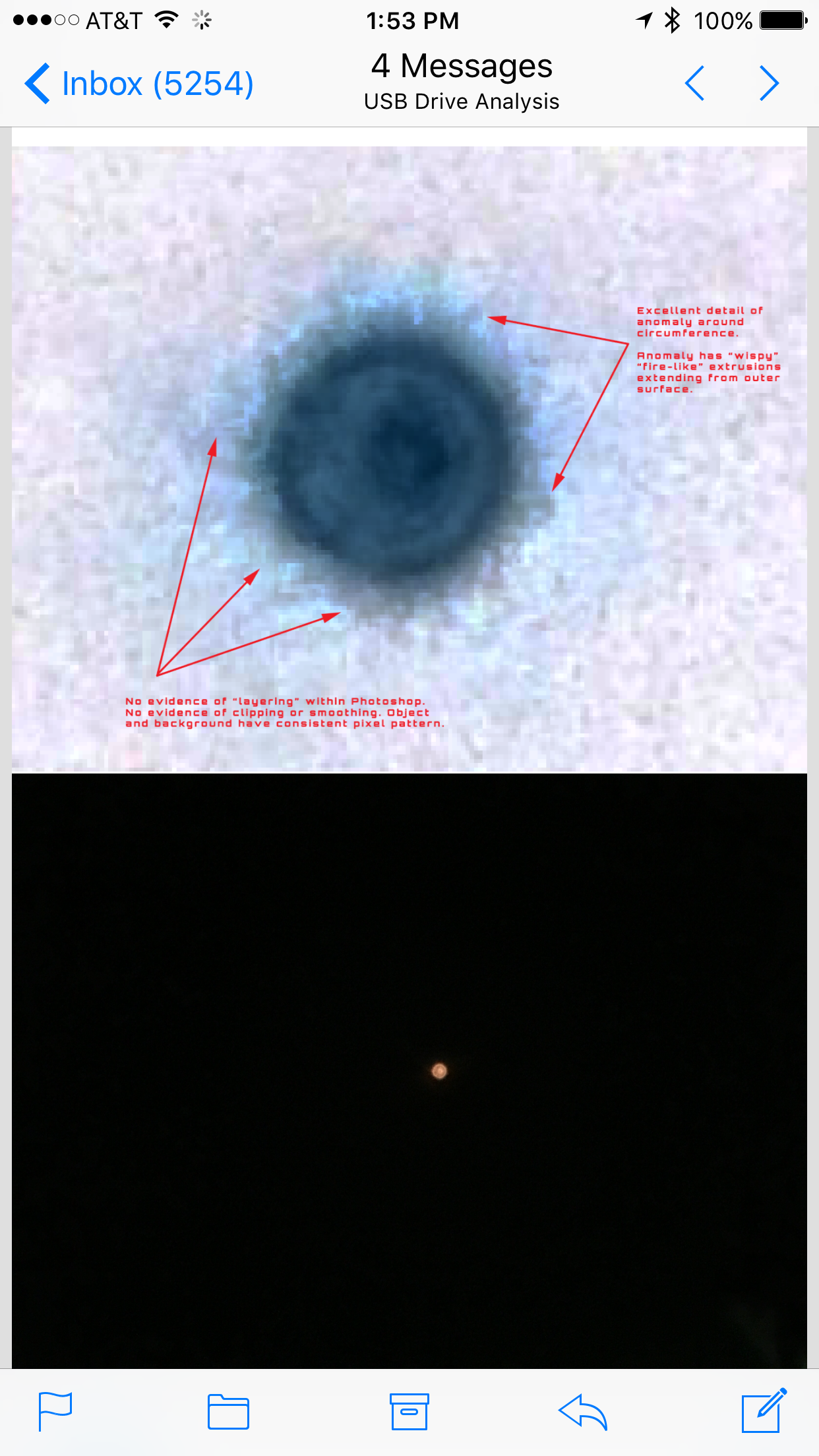

I have been following, compiling, and commenting on this matter since it was re-released. I have initial comments (here and here) on both of the first threads and have been absolutely glued to this. I have had a very hard time debunking any of this; any time I think I get some relief, the debunk gets debunked.

Sat Video Contention

There has been enormous discussion around the sat video: its stereoscopic layer, noise, artifacts, fps, cloud complexity, you name it.

Since we have a lot of debunking threads on this right now, I figured I would play devil’s advocate.

Stereoscopic

I myself went ahead and converted it into a true 3D video for people to view on YouTube. When you view it, it does look like it has depth data, and this post here backs that up with a ton of data. There does seem to be some agreement that this stereo layer has been generated through some hardware/software/sensor trickery instead of actually being filmed and synced from another imaging source. I am totally open to the stereo layer being generated from additional depth data instead of a second camera. This is primarily due to the look of the UI on the stereo layer and the fact that there is shared noise between both sides. If the stereo layer is generated, it would pull the same noise into it.

Exactly Like This: https://youtu.be/NssycRM6Hik?t=110

Noise/Artifacts/Cursor & Text Drift

So this post here seemed to have some pretty damning evidence until I came across a comment thread here. I don’t know why none of us really put this together beforehand, but it seems like these users have first-hand knowledge of this interface.

This actually appears to be a screencap of a remote terminal stream. That would make sense, since users would not be plugged directly into the satellite or a server; they would be in a SCIF at a secure terminal, or perhaps this is from within the datacenter or another contractor’s remote terminal. This could explain all the subpixel drifting, which would come from streaming from one resolution to another. It would explain the nonstandard cursor and the latency as well. Also, judging from the panning, this video appears to be enormous and would require quite the custom system for viewing it.

Citrix HDX/XenDesktop

It is apparent to many users in this discussion chain that this is a Citrix remote terminal running at the default of 24fps.

XenDesktop 4.0 (article created in 2014 and updated in 2016).

Near the top they say “With XenDesktop 4 and later, Citrix introduced a new setting that allows you to control the maximum number of frames per second (fps) that the virtual desktop sends to the client. By default, this number is set to 30 fps.”

Below that, it says “For XenDesktop 4.0: By default, the registry location and value of 18 in hexadecimal format (Decimal 24 fps) is also configurable to a maximum of 30 fps”.
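As a quick sanity check on the numbers in that quote, here is a minimal sketch of the hex/decimal conversion. The variable names are just mine for illustration; nothing here reads an actual registry or touches Citrix.

```python
# The quoted registry value is written in hexadecimal.
registry_value_hex = "18"

# Interpret the string as base 16 to get the frame rate in decimal.
fps = int(registry_value_hex, 16)
print(fps)

# Going the other way: the quoted 30 fps maximum expressed in hexadecimal.
max_fps_hex = format(30, "x")
print(max_fps_hex)
```

So hexadecimal 18 really is decimal 24, which matches the 24fps figure people keep pointing at.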

Also the cursor is being remotely rendered which is supported by Citrix. Lots of people apparently discuss the jittery mouse and glitches over at /r/citrix. Citrix renders the mouse on the server then sends it back to the client (the client being the screen that is screencapped) and latency can explain the mouse movements. I’ll summarize this comment here:

The cursor drift ONLY occurs when the operator is not touching the control interface. How do I know this? All other times the cursor stops in the video, it is used as the point of origin to move the frame; we can assume the operator is pressing some sort of button to select the point, such as the right mouse button.

BUT When the mouse drift occurs, it is the only time in the video where the operator “stops” his mouse and DOESN’T use it as a point of origin to move the frame.

Here are some examples of how these videos look and artifacts are presented:

XenDesktop 4.0 running on someone’s computer

XenDesktop 7.6 playing Battlefield 4. Not the exact same software but have a look at the “Activate Windows” text in the corner. Do you see what I see?

XenDesktop 7.5 from 2014 running at 22 FPS – Similar cursor movement?

So in summary, if we are taking this at face value, I will steal this comment listing what may be happening here:

Screen capture of terminal running at some resolution/30fps

Streaming a remote/virtual desktop at a different resolution/24fps

Viewing custom video software for panning around large videos

Remotely navigating around a very large resolution video playing at 6fps

Recorded by a spy satellite

Possibly with a 3D layer
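To get a feel for what stacking those frame rates would do, here is a rough sketch using the rates from the list above (30fps capture, 24fps stream, 6fps source). It assumes perfectly regular, jitter-free sampling at every stage, which a real remote desktop session would not have, and it is only a toy model, not how Citrix actually schedules frames.

```python
from itertools import groupby

# Rates taken from the list above; everything else is an idealized assumption.
CAPTURE_FPS = 30  # screen capture of the terminal
STREAM_FPS = 24   # remote/virtual desktop stream
SOURCE_FPS = 6    # the large video being panned around


def frame_indices(n_capture_frames):
    """For each captured frame, find which stream frame and source frame it shows."""
    rows = []
    for i in range(n_capture_frames):
        # Latest stream frame available when capture frame i is taken.
        stream_idx = (i * STREAM_FPS) // CAPTURE_FPS
        # Latest source frame contained in that stream frame.
        source_idx = (stream_idx * SOURCE_FPS) // STREAM_FPS
        rows.append((i, stream_idx, source_idx))
    return rows


def run_lengths(values):
    """Count how many consecutive captured frames show the same index."""
    return [len(list(group)) for _, group in groupby(values)]


rows = frame_indices(CAPTURE_FPS)  # one second of captured video
stream_runs = run_lengths(r[1] for r in rows)
source_runs = run_lengths(r[2] for r in rows)

print("stream frame repeats:", stream_runs)
print("source frame repeats:", source_runs)
```

In this idealized model, every fourth stream frame gets captured twice (a 2, 1, 1, 1 pattern) and each source frame is held for five captured frames. Anything drawn by the desktop layer, like the cursor or text, could update on a different cadence than the underlying imagery, which is at least consistent with the drift people are describing.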

To me, this is way too complex to ever have been thought of by a hoaxer. I mean, good god. How they got this data out of the SCIF is a great question, but this scenario is getting more and more plausible and, honestly, very humbling. If this and the UAV video are fabrications, I am floored. If they aren’t, well, fucking bring on disclosure because I need to know more.

Love you all and amazing fucking research on this. My heart goes out to the families of MH370. <3

edit: resolution

edit2: noise

submitted by /u/TachyEngy
